
Post by Admin on Apr 6, 2023 15:55:04 GMT

We know the question that keeps you awake at night: when did humans start eating snails? Researchers have now discovered the earliest evidence of prehistoric people cooking and eating these terrestrial mollusks. But while you might imagine a rustic version of modern escargot, the snails in question were enormous by comparison.

A team of researchers from the University of the Witwatersrand in Johannesburg, South Africa, has found shell fragments of land snails from the Achatinidae family, which can grow to 16 centimeters (6.3 inches) long, at Border Cave, located on a cliff near South Africa's border with Eswatini. The site has been excavated on multiple occasions since the 1930s, but it was during work conducted between 2015 and 2019 that the shell fragments of giant land snails were found. The shell pieces, recovered in relative abundance, appeared in multiple layers of sediment dating from 70,000 to 170,000 years ago. They also come in a range of colors, “from lustrous beige to brown and matt grey”, the authors write, a variation that can occur when shell is heated.

According to the team, invertebrate animals such as snails make up more than 95 percent of Earth’s biodiversity, but they are often overlooked in archaeological research because they are seen as largely unimportant for understanding the history of human behavior. This marginalization is made worse by how small most specimens are, which gives them less chance of surviving in the archaeological record. Snails can be a useful exception thanks to their shells. Land snails can appear at excavation sites either because they occur naturally in the area, where they bury themselves in the soil to avoid dehydration, or because people brought them there, whether to eat them or to use their shells for other purposes such as jewelry or religious practices.
Other research has shown that snail consumption appears at dig sites dated to about 30,000 years ago in Europe and around 40,000 years ago in Africa. That leaves a “huge gap” between those sites and the new findings, study author Marine Wojcieszak told New Scientist.

“Terrestrial molluscs are an excellent source of nutrients,” the authors write, “they are easy and not dangerous to collect, they can be stored for some time before being consumed, they are simple to prepare and to digest as long as one has a basic mastery of fire”. Given that hominins have been using fire for at least 400,000 years, it is easy to see how the latest finds fall within the realm of possibility. In fact, there is evidence that hominins were cooking fish over 780,000 years ago.

To test their hypothesis that the snails were at the site because humans ate them, the team took shells from modern land snails and broke them into fragments of different sizes and colors. The fragments were then experimentally heated for periods ranging from 5 minutes to 36 hours, providing a reference set to compare with their prehistoric counterparts. Higher temperatures and longer heating turned previously white fragments a brighter “snow white”, while beige and brown samples turned white and grey. Heating also removed the shells’ gloss, because it burnt out the organic matter responsible for the sheen. The heated shells also showed extensive micro-cracking.

The same patterns were present on the prehistoric shells found at the site in South Africa. “Microscopic analysis of the modern heated shells and archaeological specimens from Border Cave shows that they share features resulting from exposure to heat, namely micro-cracking and a matt surface appearance,” the authors state.
“This finding, and the fact that most of the archaeological specimens derive from combustion features demonstrates that these shell fragments were most likely heated.” Although the possibility that the shells reached the site naturally (for example, snails burrowing into soil where a fire later heated them by accident) cannot be ruled out, human agency seems the most likely cause. That interpretation is strengthened by the remains of other potential food items, such as seeds and bones, found nearby, Wojcieszak told New Scientist. Plus, the cave itself is too isolated for the food leftovers to have appeared on their own.

The results don’t just tell us about our ancestors' eating habits; they also offer a “glimpse into the potentially complex social life of early Homo sapiens”, the authors conclude. The study is published in Quaternary Science Reviews. www.sciencedirect.com/science/article/abs/pii/S0277379123000781?via%3Dihub#bib42


Post by Admin on Apr 7, 2023 19:02:08 GMT

Evidence for large land snail cooking and consumption at Border Cave c. 170–70 ka ago. Implications for the evolution of human diet and social behaviour

Abstract

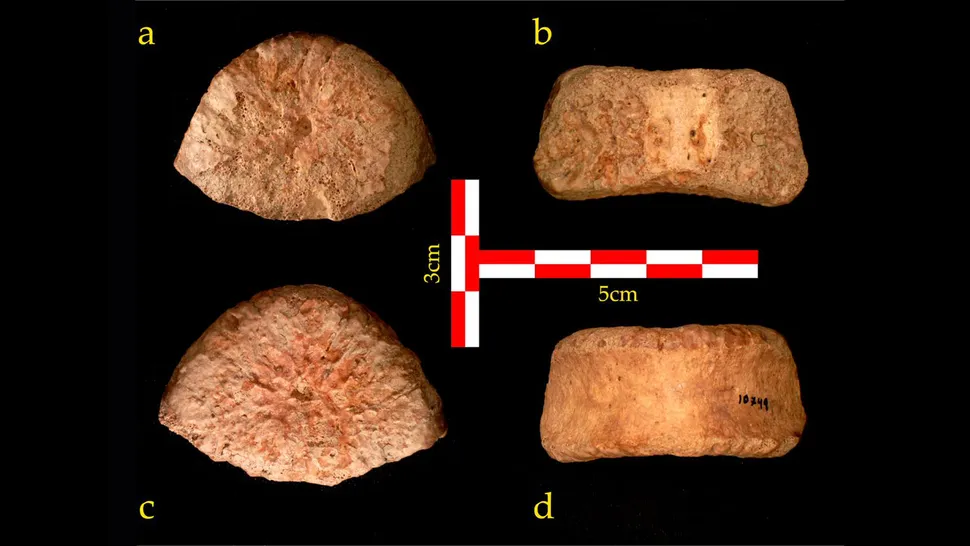

Fragments of land snail (Achatinidae) shell were found at Border Cave in varying proportions in all archaeological members, with the exception of the oldest members, 5 WA and 6 BS (>227,000 years ago). They were recovered in relatively high frequencies in Members 4 WA, 4 BS, 1 RGBS and 3 WA. The shell fragments present a range of colours from lustrous beige to brown and matt grey. This colour variability can occur when shell is heated. This possibility was explored here through experimental heating of giant land snail shell fragments (Achatinidae, Metachatina kraussi, brown-lipped agate snail) in a muffle furnace from 200 to 550 °C for different lengths of time. Colour change, weight loss, and shattering of the heated samples were recorded. The transformation of aragonite into calcite and the occurrence of organic material were investigated by means of infrared and Raman spectroscopy. Scanning electron microscopy was also used on selected specimens to help distinguish heat-induced transformation from taphonomic alteration. The identification on archaeological fragments of features produced by experimentally heating shells at high temperatures or for long periods has led us, after discarding alternative hypotheses, to conclude that large African land snails were systematically brought to the site by humans, roasted and consumed, starting from 170,000 years ago and, more intensively, between 160,000 and 70,000 years ago. Border Cave is at present the earliest known site at which this subsistence strategy is recorded. Previous research has shown that charred whole rhizomes and fragments of edible Hypoxis angustifolia were also brought to Border Cave to be roasted and shared at the site. Thus, evidence from both the rhizomes and snails in Border Cave supports an interpretation of members of the group provisioning others at a home base, which gives us a glimpse into the complex social life of early Homo sapiens.

Introduction

When, how, and why terrestrial molluscs became a constituent part of the diet of our ancestors are questions that remain largely unanswered and that puzzle researchers in many respects. Invertebrate animals represent more than 95% of earth's biodiversity (Herbert and Kilburn, 2004), but they are often not studied in archaeological assemblages because they are considered marginal for the understanding of past human behaviour (Mannino, 2019). In addition, most of them are small and have little chance of surviving in the archaeological record. Among the most common invertebrate remains are those of molluscs, soft-bodied animals with mineralised exoskeletons.

Land snails may be present in archaeological sites because they occur naturally in the sediments or because they were introduced by the occupants to consume their flesh and/or to use their shells as raw materials or as body ornaments (Mannino, 2019). Although the shell was often used for bead-making in African Iron Age contexts in the late Holocene, older symbolic use may have occurred too, as evidenced by an Achatina shell fragment interpreted as engraved with a criss-cross pattern, recently found at Txina-Txina, southern Mozambique, in layers dated 27.4–26.8 ka cal BP (Bicho et al., 2018).

Many terrestrial molluscs bury themselves deep in the soil to avoid dehydration during dry winters (Herbert and Kilburn, 2004), so they can be found naturally in sediments, or they can be brought to a site by their numerous predators. Adverse climatic events can also cause the death of snails in their natural habitats, and consequently their shells may be incorporated in cave and shelter deposits through geological processes. Multiple uses of land snail shell have been investigated from an ethnoarchaeological point of view, including use as tools for agricultural, fishing, hunting or household purposes, or as decorations or ritual implements (Lubell, 2004a; Mannino, 2019). Snail shells have been discovered in numerous African sites, including Border Cave, the subject of this volume.

At Haua Fteah Cave in Libya, North Africa, preliminary analysis suggests that small land snails were consumed occasionally from 40 to 15 ka cal BP, and more regularly from 14 to 10 ka cal BP (Barker et al., 2012). The site of Taforalt (Grotte des Pigeons) in Morocco, Northwest Africa, records land snail consumption from 13 to 11 ka BP (Taylor et al., 2011). Near Beirut, in the Levant, the site of Ksar ’Aqil has yielded an accumulation of land snails dated 23–22 ka BP (Mellars and Tixier, 1989). In Europe, Klissoura Cave 1 in Greece records land snails with broken lips that are interpreted as evidence of human consumption, in levels dated between 36 and 28 ka cal BP (Kuhn et al., 2010). Nearby, Franchthi Cave documents land snail consumption between 15 and 12 ka cal BP (Stiner and Munro, 2011). The site of Cova de la Barriada in Spain preserves compelling evidence for land snail consumption in Europe from 31.3 to 26.9 ka cal BP (Fernández-López de Pablo et al., 2014). Here land snails were found in three different levels in association with combustion features, lithic and faunal assemblages. Charcoal analysis revealed that the snails were roasted in embers of pine and juniper. X-ray diffraction analysis showed that all specimens presented aragonite (CaCO3) as the only mineral phase, indicating that the heating temperature was insufficient to promote the formation of calcite (Parker et al., 2010). The site of Cueva de Nerja, also in Spain, records land snails throughout the stratigraphic sequence, including Gravettian (28–19 ka) and Solutrean (22–17 ka) deposits (Aura Tortosa et al., 2012; Jordá et al., 2011), while the Italian site of Grotta della Serratura evidences land snail consumption in Epigravettian layers dated 14.1–13.7 ka cal BP (Martini et al., 2009).
Edible land snails are often abundant in late Pleistocene and Holocene archaeological sites, and have been found in the Caribbean, Peru, parts of North America, East Africa, Sudan, Nigeria, the Philippines and throughout the Mediterranean region, where they became a substantial part of the human diet (Lubell, 2004b). The consumption of land snails continues today in the Mediterranean Basin in Spain, France, Italy, Portugal, Algeria, Morocco, and Tunisia. Land snails are also consumed in Nepal, Southeast Asia and Northeast India, and there is a growing demand in South America.

The above review of the evidence highlights, however, what can be perceived as a conundrum in the history of our lineage's adaptation. Terrestrial molluscs are an excellent source of nutrients, they are easy and not dangerous to collect, they can be stored for some time before being consumed, and they are simple to prepare and to digest as long as one has a basic mastery of fire, certainly acquired by hominins at least 400,000 years ago. However, and contrary to what one might expect, the systematic consumption of terrestrial molluscs does not seem to date back to before 49 ka in Africa and 36 ka in Europe, and does not become an essential component of the diet of most human populations before 15–10 ka. Although terrestrial molluscs, especially those of small size, are subject in some sedimentary contexts to taphonomic processes that can damage or destroy them, it is difficult to attribute the apparently late onset of their exploitation to taphonomic causes. For this reason, the dramatic intrusion of this food source in the late Pleistocene or, depending on the region, early Holocene, has traditionally been interpreted as a consequence of an expansion of subsistence resources that would have developed at that time, probably due to demographic pressure. This “broad spectrum revolution” (Flannery, 1969) would have aimed at the systematic exploitation of all available resources, and in particular small prey such as small mammals, fish, reptiles and molluscs (Flannery, 1969; Stiner, 2001; Zeder, 2012). This view has tended to attribute possible evidence of older exploitation of terrestrial molluscs to the many natural phenomena that can lead the remains of these animals to be incorporated into sedimentary sequences or, at most, to sporadic consumption that does not substantially change the classical view of the evolution of the diet of our genus.
It is quite possible, however, that this mainstream narrative reflects only the best-documented and archaeologically visible part of a more complex process that has involved, since the mastery of fire, the exploitation of certain nutritious species that were only available in certain ecological settings. It is also possible that these subsistence strategies were lost in some cases and reacquired in others. Because of its particular characteristics, Achatina (giant African land snail) may have been a continuously exploited species in certain regions of Africa, and may have been an important part of the diet of Middle Stone Age (MSA) populations long before the “broad spectrum revolution”. This point of view is supported, but not really substantiated, by archaeological evidence that predates our work at Border Cave. The earliest possible habitual consumption of land snails in Africa, from level V upper of Mumba-Höhle Cave in Tanzania, East Africa (Lubell, 2004b), dated to 49.1 ± 4.3 ka (Gliganic et al., 2012), consists of Achatina sp. shells found in association with lithic artefacts (Mehlman, 1979), though a description of the snail assemblage is yet to be published. An even older possible consumption of this species comes from the Kenyan site of Panga ya Saidi (Martinón-Torres et al., 2021). These authors report large frequencies of Achatina sp. fragments, many of which bear traces of heating in the form of distinct blackening, in layers dated c. 78 ka, in which a burial of a three-year-old child was discovered. More recent evidence for possible MSA exploitation of Achatina comes from Sibudu Cave (Plug, 2004), Bushman Rock Shelter (Badenhorst and Plug, 2012) and Kuumbi Cave, Zanzibar (Faulkner et al., 2019; Shipton et al., 2016).

The Achatinidae family includes the largest land snails, and KwaZulu-Natal has a relatively rich agate snail fauna, including 15 species (Herbert and Kilburn, 2004). They are herbivores, but also consume soil, calcareous rocks, the shells of other molluscs and bone from carcasses to form their own shells, made of the aragonite polymorph of calcium carbonate (CaCO3) (Herbert and Kilburn, 2004). This large gastropod is still a popular food in West African countries including Cameroon, Ghana and Nigeria (Griveaud, 2016), but not in South Africa among rural people living in Nkungwini near Border Cave (personal communication by B. Vilane to L.W. in 2016). In some parts of Africa, Achatina snails are believed to have various curative properties, and the bluish liquid (haemolymph) obtained from the shell reportedly helps with infant development (Ugwumba et al., 2016; Munywoki, 2022). Snail meat is highly nutritious; it is rich in protein, iron, potassium, phosphorus, magnesium, selenium, calcium, copper, sodium, zinc, omega-3 and other essential fatty acids, and various vitamins, including vitamin A, thiamine (B1), riboflavin (B2), niacin (B3), vitamin B6, B12, C, D, E and K, while being low in fat (Aboua, 1990; Fagbuaro et al., 2006). An adult Achatina snail may have a mass of close to a kilogram, so it provides a substantial protein source. Recent studies (Dumidae et al., 2019) suggest that humans can contract a parasitic disease caused by a worm hosted by the giant African land snail. Careful handling may prevent disease. Of course, we do not know whether the parasite is a modern phenomenon or whether humans in the past were also vulnerable to disease when handling the snails.

The objective of this study is to use the results of new high-resolution excavations at Border Cave and appropriate analytical methods to test the hypothesis that Achatina snails were consumed systematically by human groups in Border Cave between about 170 ka and 70 ka ago (and occasionally thereafter) and discuss the role that this giant land snail may have played in early modern human cultural adaptations.


Post by Admin on Apr 14, 2023 18:39:25 GMT

Homo sapiens wore leather in Europe 39,600 years ago, according to a study of an ancient bone from Spain whose unusual pattern of notches suggests it was used as a surface for punching holes in leather. As reported by New Scientist, the bone was found at a site called Terrasses de la Riera dels Canyars near Barcelona, Spain, and came from the hip of a large animal such as a horse or bison. On its flat surface there are 28 puncture marks, including a linear row of 10 holes spaced roughly 5 millimeters apart, as well as additional holes in more haphazard locations.

“We do not have much information about clothes because they’re perishable,” says Luc Doyon at the University of Bordeaux, France, who led the study. “They are an early technology we’re in the dark about.” According to Doyon, the pattern was "highly intriguing" because it didn't seem to be a decoration or a counting tally, the usual explanations for intentionally placed lines or dots on prehistoric artifacts.

10 indents were created by a single tool

Microscopic examination showed that the line of 10 indents was created by a single tool, while the other dots were made with five different tools at different times. The researchers used experimental archaeology, in which researchers recreate ancient tools and techniques to discover how particular marks were formed. According to the team, the most plausible explanation is that the indents were created during the production or repair of leather goods. Doyon suggests that the hide was pierced to create a tight seam, with a pointed tool used to push thread through the material.

“It’s a very significant discovery,” says Ian Gilligan at the University of Sydney, Australia. “We have no direct evidence for clothes in the Pleistocene, so finding any indirect evidence is valuable.
The oldest surviving fragments of cloth in the world date from around 10,000 years ago.” The discovery helps resolve a riddle surrounding the invention of tailored clothing. Eyed needles have not been found in this region before about 26,000 years ago, and even then they weren't strong enough to repeatedly pierce thick leather, which raises the question of how earlier people made clothing that fitted them. Homo sapiens arrived in Europe around 42,000 years ago. The study was published in Science Advances on April 12.

www.science.org/doi/10.1126/sciadv.adg0834

Study abstract: Puncture alignments are found on Palaeolithic carvings, pendants, and other fully shaped osseous artifacts. These marks were interpreted as abstract decorations, system of notations, and features present on human and animal depictions. Here, we create an experimental framework for the analysis and interpretation of human-made punctures and apply it to a highly intriguing, punctured bone fragment found at Canyars, an Early Upper Palaeolithic coastal site from Catalonia, Spain. Changes of tool and variation in the arrangement and orientation of punctures are consistent with the interpretation of this object as the earliest-known leather work punch board recording six episodes of hide pricking, one of which was to produce a linear seam. Our results indicate that Aurignacian hunter-gatherers used this technology to produce leather works and probably tailored clothes well before the introduction of bone eyed needles in Europe 15,000 years later.


Post by Admin on Apr 21, 2023 19:13:17 GMT

Ancient Humans and Present Day

It’s unlikely that human lifespan (how long a human can live if violence, disease or natural disaster doesn’t intervene) has changed measurably for tens of thousands of years. But that is difficult to verify one way or the other, since violence, disease, natural disasters or a host of other problems usually do intervene if you spend enough time on this planet. “[Early humans] used to live, have children, then die immediately from disease or predation,” says Marios Kyriazis, a biomedical gerontologist at the National Gerontology Center in Cyprus. Although there are historical reports of people living to 150, these are most likely exaggerations, says Walter Scheidel, a historian at Stanford University who studies the demographics of the Roman Empire.

“Nature made the human body in a way to have children, live for a few more years to see our children growing up, and maybe reach the age where we can see grandchildren,” says Kyriazis, who authored the study “Aging Throughout History: The Evolution of Human Lifespan” published in the Journal of Molecular Evolution. The share of people who live long enough to see their grandchildren has increased, though, thanks to better hygiene, medicine and nutrition. But chronic degenerative diseases and other problems are harder to stop: nature doesn’t allow our bodies to keep making repairs past a certain point, Kyriazis says.

Rough Trends for Ancient Human Life Spans

Research on ancient human lifespans is difficult because too few remains have been found together to draw solid conclusions. But some research shows that life expectancy wasn’t very different between Neanderthals and the early humans found in western Eurasia.
Other research suggests that the lifespan of Homo sapiens may have changed between the Middle Paleolithic and the later Upper Paleolithic, since the ratio of older to younger remains increases. The same research shows that starting about 30,000 years ago, at the beginning of the Upper Paleolithic, the average lifespan began to push past 30 years.

Another big change coincides roughly with the period when humans began to adopt sedentary lifestyles, 10 millennia or so ago, moving into urban centers like Çatalhöyük in Turkey. A more nomadic lifestyle was healthier than living in the denser towns of the time, which lacked proper sewage systems and hygiene. As people lived in closer proximity to each other and their livestock, diseases could spread more easily between humans and domesticated animals. Some research at Çatalhöyük suggests that as the city became denser, people grew less healthy, likely leading to a drop in average life expectancy. “The capacity of people to live longer remained, but many people died from disease and diet,” says Kyriazis.

By Roman times, records were a lot better in some cases. Scheidel has examined census records for Egypt from when it was a province of the Roman Empire, about two millennia ago. These records show that, overall, average life expectancy was in the 20s. Of course, many people lived longer than this; the overall figure is pulled down largely because infant mortality was high. For people who made it past the age of five, life expectancy rises to somewhere in the 40s, Scheidel says. An analysis of Roman emperors, for whom we have a lot of information, reveals similar statistics. “The ones that don’t get murdered, which is a minority, they have more or less the same life expectancy,” Scheidel says.

Which Average?

Just as it must have been in the past, life expectancy today isn’t distributed equally. Hong Kong boasts the highest average life expectancy at 85.3 years, according to Worldometer.
The Central African Republic has the lowest, at 54.4 years, due to a combination of factors that include deaths from COVID-19 and other diseases and an ongoing civil conflict, according to recent research. The overall life expectancy of humans today is 73.2 years: 75.6 years for females and 70.8 years for males. This has increased a lot in just a few decades, due in part to advances in medicine; in 1950, average life expectancy was 47 years.

These improvements in life expectancy have led to a new focus, Kyriazis says. Where people were once mostly concerned with having many children who would survive them, many now have fewer children and focus more on their own survival. “For the first time in human history, we see the phenomenon where we shift from survival of children to our own survival,” he says.


Post by Admin on May 1, 2023 17:19:50 GMT

A 1.5 million-year-old vertebra from an extinct human species unearthed in Israel suggests that ancient humans may have migrated out of Africa in multiple waves, a new study finds. Although modern humans, Homo sapiens, are now the only surviving members of the human family tree, other human species once roamed Earth. Prior work revealed that long before modern humans made their way out of Africa, possibly as early as about 270,000 years ago, now-extinct human species had already migrated from Africa to Eurasia by at least 1.8 million years ago, during the early part of the Pleistocene (2.6 million to 11,700 years ago), the epoch that included the last ice age.

Scientists had debated whether ancient humans dispersed from Africa in a single event or in multiple waves. Now, based on a newly analyzed vertebra from an unknown human species, researchers have concluded that the latter scenario is more likely. At about 1.5 million years old, the vertebra is the oldest evidence yet of ancient humans in Israel, study lead author Alon Barash, a paleoanthropologist and human anatomist at Bar-Ilan University in Israel, told Live Science.

In 2018, while reexamining bones initially unearthed at 'Ubeidiya in 1966, the scientists discovered what appeared to be a vertebra from the lower back of a hominin, the group that includes humans, our ancestors and our closest evolutionary relatives. "It's great to see new discoveries coming from old collections like this one," John Hawks, a paleoanthropologist at the University of Wisconsin-Madison who was not involved with the study, told Live Science. "It shows that there is always something left to find even when archaeologists think they've done it all." After comparing the vertebra with those of a range of animals, including bears, hyenas, hippos, rhinos and horses that once lived in the 'Ubeidiya region, as well as gorillas and chimps, the team concluded that the bone came from an extinct species of human.
(There is not enough data from this one bone to reveal whether it belonged to any known species of extinct human.) Based on the bone's size, shape and other features, the researchers estimated that it belonged to a 6- to 12-year-old child. Even so, they estimated that at death the child would have stood about 5 feet, 1 inch (155 centimeters) tall and weighed about 100 to 110 pounds (45 to 50 kilograms), as large as an 11- to 15-year-old modern human. In other words, this child would have been head and shoulders taller than its modern counterparts. "The study shows how much information about an ancient individual we can get from a small piece of the anatomy," Hawks said.

Roughly 1.8 million-year-old human fossils previously unearthed in Dmanisi, Georgia, suggested those extinct humans were small-bodied hominins about 4 feet, 9 inches to 5 feet, 5 inches (145 to 166 cm) tall and 88 to 110 pounds (40 to 50 kg) in weight as adults. In contrast, the scientists analyzing the 'Ubeidiya vertebra suggested that in adulthood, that individual might have reached much greater size: 6 feet, 6 inches (198 cm) and 220 pounds (100 kg). These findings indicate that the 1.8 million-year-old fossils from Dmanisi and the 1.5 million-year-old fossil from 'Ubeidiya belonged to two different kinds of hominins. As such, ancient humans likely left Africa in more than one wave, the researchers said.

Other differences between the Dmanisi and 'Ubeidiya specimens also suggest they belonged to different human groups. For instance, the stone tools found at Dmanisi, known as Oldowan, were relatively simple, usually made from one or a few flakes chipped off with another stone. In contrast, those found at 'Ubeidiya, known as early Acheulean, were more complex, including hand axes made from volcanic rock. In addition, the climates at Dmanisi and 'Ubeidiya differed: Dmanisi was drier, with a savanna habitat, whereas 'Ubeidiya was warmer and more humid, with woodland forests.
Based on these sites, the scientists propose a scenario in which distinct human species occupied different habitats and produced different tools. The scientists detailed their findings online Feb. 2 in the journal Scientific Reports. doi.org/10.1038/s41598-022-05712-y
